Android Q's speech recognition aims to protect your privacy too

Google's live captions listen to you but will keep your conversations off the cloud, the company says.

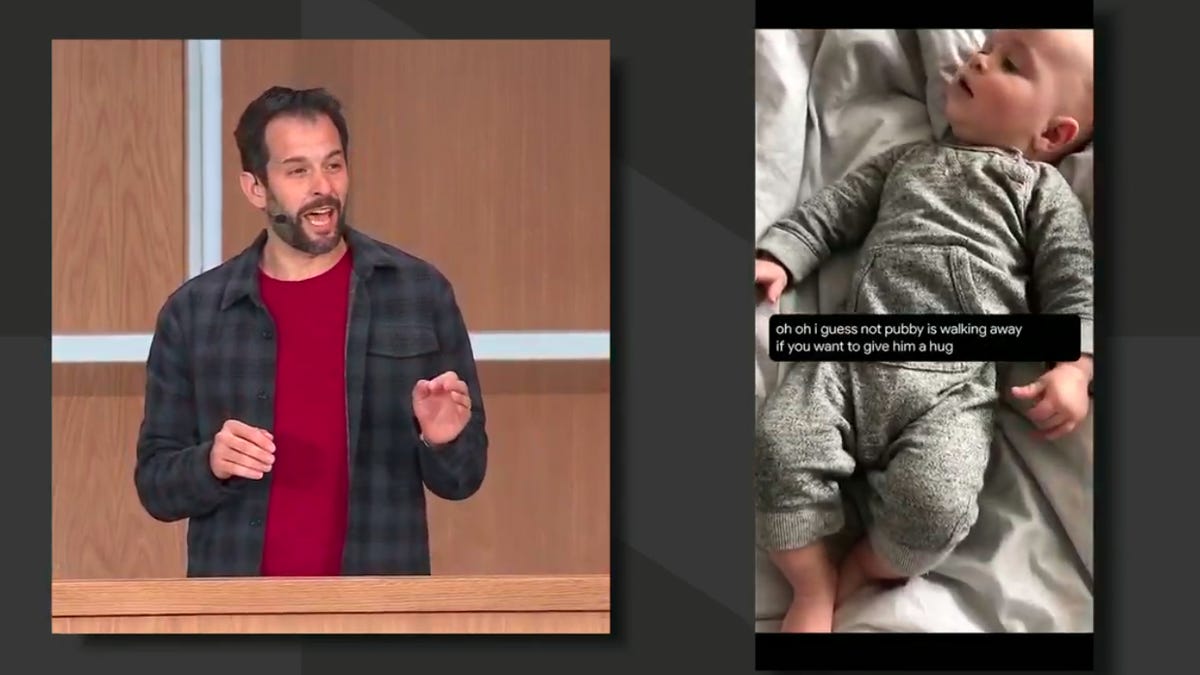

A Google presenter describes the new Android feature that captions videos in real time.

Google unveiled new Android Q features Tuesday that it says can caption home videos in real time, with no internet connection needed. That's possible because Google's speech recognition software now requires less processing power, so it can run directly on your phone or tablet, Google said.

That comes with an added privacy benefit. Instead of streaming audio from your device to Google's cloud servers, the software processes it locally, so the content of your conversations stays on your device.

"No audio stream ever leaves it," Google's Stephanie Cuthbertson said at the Google I/O developer conference Tuesday. "That protects user privacy."

The shift shows that Google's machine learning software is getting more efficient, with increased privacy as one benefit for users. It's a notable advance as major tech companies come under scrutiny for how much of our conversations they record and store on their own servers, and as Google itself consolidates more data about users through its smart home products.

Machine learning software is typically resource intensive. That's because it has to analyze large amounts of data, like audio streams or language patterns in texts or emails, and respond immediately with things like captions, audio responses or suggested replies to emails. Android devices can do all of these things.

The on-device machine learning is possible because of a "huge breakthrough in speech recognition" Google made earlier this year, Cuthbertson said. The breakthrough lowered the processing power needed to run the software enough that Android can run it on your phone without quickly draining your battery. It applies to all of Google's real-time captions, including those for video you watch in apps or in a web browser.