Can Samsung's AI upscaling really make TV images better?

Artificial intelligence and machine learning aim to bridge the resolution gap between 4K and 8K TVs and the video actually available today.

When I first heard Samsung talk up AI upscaling in its 8K TVs, I assumed it was the age-old marketing practice of smashing a new buzzword onto some old tech and proclaiming it super-fancy.

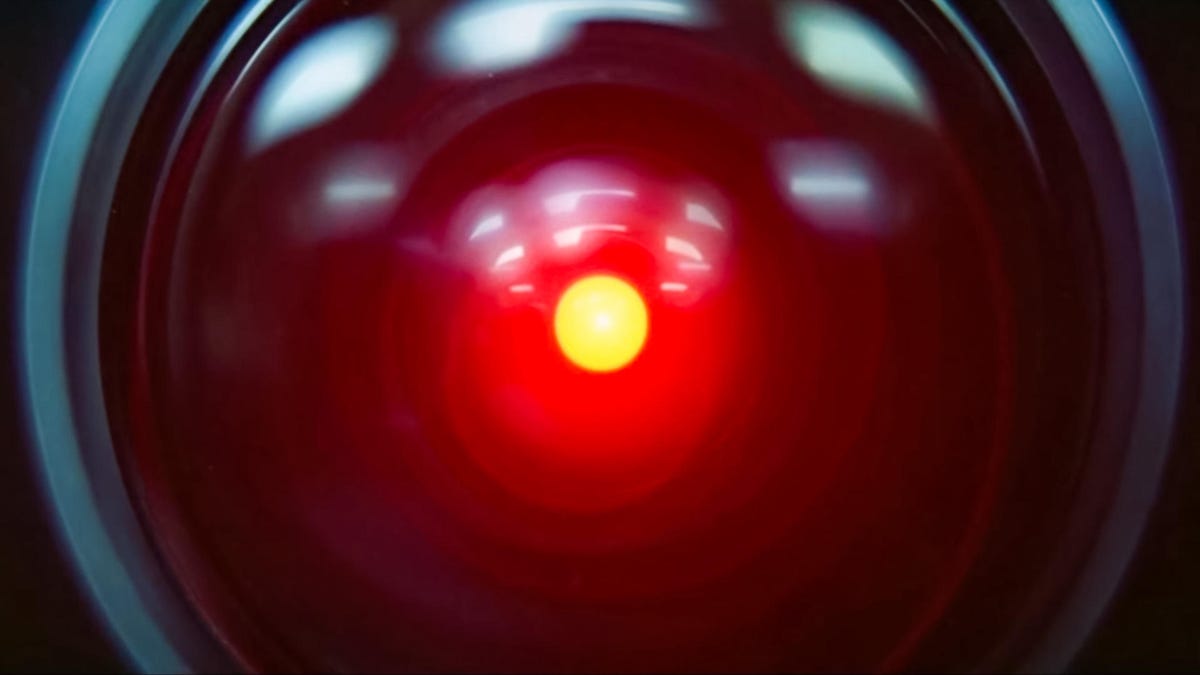

That might not be the case. While adding artificial intelligence isn't going to suddenly make your TV smarter, or sentient (yet), there are theoretical benefits to AI-enhanced video. How much actual improvement it delivers remains to be seen -- we haven't yet reviewed any TVs with this feature. But the possibilities are intriguing.

Tech firms employ artificial intelligence in various ways -- from facial recognition to digital assistants like Alexa and Siri to helping advance education and medicine. Using it to improve image quality -- specifically the necessary conversion from lower resolution video common today to higher 4K and 8K resolutions on TV screens -- is something new, and a big focus for Samsung. The company is including AI upscaling in its 8K and 4K QLED TVs this year.

One big reason to care about upscaling technology is that high-resolution video, in particular 8K, is still a few years away. We don't even have widespread 4K TV broadcasts yet in the US. Until they become more common, you'll still be watching a lot of 1080p and lower-resolution sources on your shiny, new 4K or 8K TV. Improved upscaling can make those sources look better, especially on larger screens.

Chief Samsung TV rival LG is doubling down on "AI" too. It's using the term in 2019 TVs for convenience features like Google Assistant and smart home integration, as well as for image-quality enhancements. Sony, on the other hand, doesn't call its picture processing "AI," yet it works in a somewhat similar way to Samsung's AI upscaling.

So how does Samsung's technology work, and why is it more intriguing than standard marketing-speak? Let's start at the beginning.

What is scaling?

All modern televisions have a set number of pixels, or individual picture elements -- tiny blocks that blend together to create the picture. This number is their resolution, usually referred to as 1080p, Ultra HD 4K, or now 8K. Your current TV, if it's a few years old, is likely 1080p. This means it has 1,920 pixels across and 1,080 pixels vertically. An Ultra HD 4K TV has twice that number both horizontally and vertically (3,840x2,160), and 8K doubles it again (7,680x4,320).

Non-4K sources, like virtually all broadcast and cable television, have far fewer pixels than 4K TVs. In the case of 720p sources, like Fox and ABC, there's only one-ninth the number of pixels needed to fill a 4K TV. If these were shown on a 4K TV without scaling or upconverting, they would appear as a tiny image in the center of the screen, surrounded by massive black bars.

To create an image that fills the screen, a TV has to have data for every one of those pixels. If you send a 1080p TV the video from a 1080p Blu-ray, there's no problem. The source, Blu-ray, has the same number of pixels as the TV.

The challenge is that not all content has the same resolution as your TV. When you play a DVD, with its 720x480 resolution, on your 1080p TV, the TV has to create all-new pixels to fill the screen. The same is true on 4K TVs when showing 1080p video. Since 4K TVs have twice the horizontal and vertical resolution of 1080p, they need four times as many pixels to create an image.
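The pixel arithmetic behind these comparisons is easy to check. The short sketch below assumes the standard frame sizes (720x480 for DVD, 1280x720 for 720p, 1920x1080 for 1080p, 3840x2160 for 4K UHD, 7680x4320 for 8K UHD); the dictionary and function names are made up for illustration.

```python
# Common resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "DVD (480p)": (720, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(name):
    """Total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def scale_factor(source, target):
    """How many display pixels must be filled for every source pixel."""
    return pixel_count(target) / pixel_count(source)


if __name__ == "__main__":
    for src, dst in [("DVD (480p)", "1080p"), ("720p", "4K UHD"),
                     ("1080p", "4K UHD"), ("1080p", "8K UHD")]:
        print(f"{src} -> {dst}: {scale_factor(src, dst):g}x the pixels")
```

Running it prints 6x for DVD to 1080p, 9x for 720p to 4K (the "one-ninth" above), 4x for 1080p to 4K, and 16x for 1080p to 8K.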

1080p on a 4K TV without upconversion would have a lot of black space.

This process of creating new pixels is called upconversion, upscaling, or just scaling. They all mean the same thing, and it's essentially "zooming in" on a low-resolution image, so it can fill a larger, high-resolution screen.

There are many ways to do this, but the simplest to understand is just creating additional, identical pixels from each original. For 1080p to 4K upconversion, you'd need four pixels from each original one.

In the simplest form of scaling, the original pixels are duplicated to fit the required higher resolution. So the new 4K signal has, for example, four pixels for each one in the HD original. More advanced versions of scaling do more to determine what the new pixels should be.
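Here's a minimal sketch of that one-into-four duplication (often called nearest-neighbor scaling), assuming a tiny grayscale image stored as a list of rows of brightness values. That's a big simplification: real TVs process full-color video in dedicated hardware. A factor of 2 per axis is the 1080p-to-4K case.

```python
def upscale_nearest(image, factor=2):
    """Duplicate each pixel into a factor x factor block (nearest neighbor)."""
    upscaled = []
    for row in image:
        # Repeat every pixel horizontally...
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        for _ in range(factor):
            upscaled.append(list(wide_row))
    return upscaled


small = [[10, 200],
         [50, 90]]
for row in upscale_nearest(small):
    print(row)
# [10, 10, 200, 200]
# [10, 10, 200, 200]
# [50, 50, 90, 90]
# [50, 50, 90, 90]
```

No new information is created; each original pixel just becomes a bigger block, which is why this approach looks blocky or soft on large screens.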

Scaling technology advanced as processors got faster and TV companies looked for additional ways to improve image quality. Instead of something simple like a one-into-four pixel conversion, processors began looking at the adjacent pixels to determine what the new pixels should look like. This allowed for sharper, more defined edges, which in turn look to the eye like more detail. To put it another way, your DVD doesn't look quite as soft as it would have with older or cheaper scaling methods.
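One classic way to use neighboring pixels is bilinear interpolation: each new pixel is a weighted blend of the four nearest originals, so a gradient stays a gradient instead of turning into stair steps. This is a textbook method shown for illustration, not any TV maker's actual processing, and it again assumes a grayscale list-of-rows image. The edge sharpening described above would be extra processing layered on top.

```python
def upscale_bilinear(image, factor=2):
    """Upscale a grayscale image by blending the four nearest source pixels."""
    src_h, src_w = len(image), len(image[0])
    dst_h, dst_w = src_h * factor, src_w * factor
    out = []
    for y in range(dst_h):
        # Map the output row back into source coordinates (corners aligned).
        sy = y * (src_h - 1) / (dst_h - 1) if dst_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row = []
        for x in range(dst_w):
            sx = x * (src_w - 1) / (dst_w - 1) if dst_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend horizontally on the two source rows, then vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(round(top * (1 - fy) + bottom * fy))
        out.append(row)
    return out


print(upscale_bilinear([[0, 90], [0, 90]])[0])  # [0, 30, 60, 90]
```

Where pixel duplication would turn the row `[0, 90]` into `[0, 0, 90, 90]`, the blended version fills in intermediate values.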

Fast-forward a few more years and processors were looking not just at adjacent pixels in each frame, but at multiple frames to look for motion, noise and many other factors to create an image with as much detail as possible, while keeping video noise and other picture artifacts at a minimum. This is an oversimplification, but we talk about the process in more detail in Why your 4K TV is probably the only 4K converter you need.
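To give a feel for multi-frame processing, here's a toy version of motion-adaptive temporal noise reduction. Small frame-to-frame changes are treated as noise and averaged with the previous frame; large changes are treated as motion and left alone to avoid smearing. The threshold and blend values are arbitrary assumptions, and real processors use far more sophisticated motion analysis.

```python
def temporal_denoise(previous, current, motion_threshold=12, blend=0.5):
    """Motion-adaptive temporal noise reduction on two grayscale frames."""
    out = []
    for prev_row, cur_row in zip(previous, current):
        row = []
        for p, c in zip(prev_row, cur_row):
            if abs(c - p) < motion_threshold:
                # Small change: probably static content plus noise, so blend.
                row.append(round(blend * p + (1 - blend) * c))
            else:
                # Large change: probably motion, so keep the new pixel.
                row.append(c)
        out.append(row)
    return out


frame1 = [[100, 100, 20]]
frame2 = [[104, 96, 180]]  # small jitter on the left, real change on the right
print(temporal_denoise(frame1, frame2))  # [[102, 98, 180]]
```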

It turns out that determining the right amount of sharpness, noise reduction, and other processing is a job AI can potentially do better.
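Why is "the right amount" hard to pick? Here's a one-row unsharp mask, a common sharpening technique chosen for illustration, not confirmed as what any TV uses. It adds back `amount` times the difference between each pixel and a blur of its neighborhood. A little boosts edge contrast; too much overshoots and clips, producing visible halos.

```python
def unsharp_mask_1d(line, amount):
    """Sharpen one row of 8-bit pixels with a 3-tap unsharp mask."""
    out = []
    n = len(line)
    for i, value in enumerate(line):
        left = line[max(i - 1, 0)]
        right = line[min(i + 1, n - 1)]
        blurred = (left + value + right) / 3
        # Add back a scaled copy of the local detail (pixel minus blur).
        sharpened = value + amount * (value - blurred)
        out.append(max(0, min(255, round(sharpened))))  # clamp to 0..255
    return out


edge = [50, 50, 50, 200, 200, 200]
print(unsharp_mask_1d(edge, 0.5))  # [50, 50, 25, 225, 200, 200]
print(unsharp_mask_1d(edge, 1.5))  # [50, 50, 0, 255, 200, 200]
```

At 0.5 the edge gets crisper; at 1.5 the pixels beside it are pushed all the way to black and white. The best setting depends on the content, which is the problem the rest of this article is about.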

CNET's David Katzmaier reads the fine print on an 8K Samsung TV.

Enter artificial intelligence and machine learning

Typically, engineers designing a TV use their own secret sauce to get the best image out of their company's latest video processor. They would determine what combination of sharpness enhancement, noise reduction, and so on looks best with the widest variety of content.

Generally, whatever they came up with had to be a sort of "one size fits all," in that it had to look "good" with all content, even if slightly different settings might look great with some images but worse with others. Maybe sports would look better with more edge restoration, but movies would look unnatural. Or movies would look better with more noise reduction, but live TV would look soft. These specific combinations of settings are called filters.

Though it would be possible to have a handful of different filters in a TV, finding the right one requires too much fiddling from users. Can you imagine having a selection of 10 different filters and needing to figure out if Sport no. 7 or Sport no. 8 is better for the blistering action of the latest snooker championship? No one would do that.

The various steps in Samsung's AI Upscaling process.

Enter AI. There are two aspects to Samsung's AI upscaling. The first is machine learning. Video content -- lots and lots of video content -- gets analyzed by computers. From this, new filters are created. There might be a filter for football, one for auto racing, one for reality TV, one for action movies, one for dramas and so on. There are likely even different filters for 1080p sports versus 4K sports. Samsung claims it has "over 100" different filters.

These filters are stored in each TV. That brings us to part 2. When you're watching something, the TV analyzes the image and determines what you're watching. It then automatically applies the filter it determines will look best. If those engineers (and their computer colleagues) come up with an even better filter, they can send it to the TV via a software update.
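The two-part idea (a bank of filters built offline, then a pick made on the TV) can be sketched as a lookup with a generic fallback. Everything here is hypothetical: the filter names, the settings, and especially the `classify` rule, which stands in for what would really be a trained model analyzing the picture. This is not Samsung's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Filter:
    """A hypothetical bundle of picture-processing settings."""
    name: str
    sharpen: float          # edge-enhancement strength
    noise_reduction: float  # 0.0 = off, 1.0 = maximum


# Hypothetical filter bank "learned" offline and stored in the TV,
# keyed by (content type, source resolution).
FILTER_BANK = {
    ("sports", "1080p"): Filter("sports-1080p", sharpen=0.8, noise_reduction=0.2),
    ("sports", "4K"): Filter("sports-4K", sharpen=0.4, noise_reduction=0.1),
    ("movie", "1080p"): Filter("movie-1080p", sharpen=0.3, noise_reduction=0.5),
}
GENERIC = Filter("generic", sharpen=0.5, noise_reduction=0.3)


def classify(frame_stats):
    """Stand-in for the on-TV analysis step, using made-up measurements."""
    if frame_stats["motion"] > 0.6 and frame_stats["green_fraction"] > 0.4:
        return "sports"
    return "movie"


def choose_filter(frame_stats, source_resolution):
    """Pick the specific filter for this content, else the generic one."""
    content = classify(frame_stats)
    return FILTER_BANK.get((content, source_resolution), GENERIC)


def install_update(new_filters):
    """Mimic a software update that adds or replaces filters."""
    FILTER_BANK.update(new_filters)


if __name__ == "__main__":
    busy_green = {"motion": 0.9, "green_fraction": 0.7}
    print(choose_filter(busy_green, "1080p").name)  # sports-1080p
    calm = {"motion": 0.1, "green_fraction": 0.1}
    print(choose_filter(calm, "4K").name)  # generic (no 4K movie filter yet)
    install_update({("movie", "4K"): Filter("movie-4K", 0.2, 0.4)})
    print(choose_filter(calm, "4K").name)  # movie-4K
```

The fallback matters: when the TV can't match the content to a specific filter, it still has the old one-size-fits-all setting to use.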

In theory, a specific filter will create a better-looking image than a generic filter. How much better? Probably not a huge amount. It's impossible to say exactly, but between high-end TVs of the same brand, the one with AI upscaling might look a little better on certain content. Even that comparison is hard to make fairly, though, because the two TVs likely use entirely different processors.

LG, Sony, and others

Samsung is certainly not alone, though it seems to be the first to combine these techniques in this specific way. LG and Sony, for example, each have their own similar methods.

LG's AI ThinQ is an umbrella name for multiple "AI" technologies that include voice recognition and voice-controlled assistants, which isn't what we're talking about here. LG also uses AI to augment image quality, albeit in a slightly different way than Samsung. According to LG, a "deep learning algorithm" looked at over 1 million pieces of content. This lets the TV analyze what you're watching and select the best overall settings for that particular content. LG calls this Deep Learning Picture.

This seems to be a sort of hybrid of the old method and Samsung's AI method, in that it uses AI to choose the best of what the engineers have implemented in the TV. However, LG's version also takes into account the ambient light in the room ("AI Brightness"), similar to what most smartphones do. Though not exactly what we're discussing here, the TV will also analyze and adjust the audio depending on the content. So essentially it's all a bit like more advanced, smarter "Auto" picture and sound modes.

Sony doesn't call its processing "AI" anything, but its 4K X-Reality Pro works similarly to Samsung's AI upscaling, drawing on an extensive image database to inform its image correction. Sony's term is "dual database processing." One database is used to determine what parts of an image need noise reduction, and another database is used to determine what parts need detail enhancement.

The video image is analyzed and compared to, as Sony claims, an "evolving" database of images, to determine how best to apply noise reduction and detail enhancement.
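Sony hasn't published how its databases work, so the following is only a toy illustration of the general "two separate lookups" idea. A patch of pixels is given a crude texture label, and that label is looked up independently in one table for noise-reduction strength and another for detail-enhancement strength. The table contents, thresholds, and labels are all invented for this sketch.

```python
# Two hypothetical lookup tables, keyed by a coarse texture label.
NOISE_DB = {"flat": 0.6, "textured": 0.2, "edge": 0.1}   # noise-reduction strength
DETAIL_DB = {"flat": 0.0, "textured": 0.5, "edge": 0.7}  # detail-enhancement strength


def texture_class(patch):
    """Crudely label a patch (2+ pixel values) by its spread and largest step."""
    mean = sum(patch) / len(patch)
    variance = sum((p - mean) ** 2 for p in patch) / len(patch)
    max_step = max(abs(a - b) for a, b in zip(patch, patch[1:]))
    if max_step > 60:
        return "edge"
    if variance > 25:
        return "textured"
    return "flat"


def corrections_for(patch):
    """Look the patch up in both tables independently."""
    label = texture_class(patch)
    return NOISE_DB[label], DETAIL_DB[label]


print(corrections_for([100, 102, 99, 101]))  # (0.6, 0.0): smooth it, don't sharpen
print(corrections_for([50, 200, 200, 200]))  # (0.1, 0.7): protect and enhance the edge
```

Keeping the two decisions separate means a flat patch of sky can get heavy noise reduction while a sharp edge in the same frame gets enhancement instead.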

As image processing becomes better, faster, and cheaper, expect to see more of this "smart" image processing from other companies. There's no way any TV manufacturer can know everything everyone will show on their TV, but they can design a processor that's smart enough to determine what it is you're watching, and make sure the TV looks its best showing it. That in itself isn't new, but how well TVs do it continues to get better.

Scaling to the future

Video scaling has come a long way, but it's no substitute for the real thing. While we wait for more actual 4K and 8K video, however, it's worth noting how much "easier" it is for the TV when you start with quality content.

HD-to-8K conversion requires even more pixel creation than DVD-to-HD conversion did: an 8K TV has 16 times as many pixels as a 1080p source, while a 1080p TV has six times as many as a DVD. But if you start with HD content and add the abilities of modern processing, 8K TVs will likely look better with non-8K content than early HDTVs did with non-HD content.

Going forward, expect more companies to adopt some version or variation of AI upscaling. It's another small step in the endless marathon to improve image quality. A little scaling improvement here, a dash of high dynamic range there, and all of a sudden TVs look much better than they did even a few years ago. That's progress.

As well as covering TV and other display tech, Geoff does photo tours of cool museums and locations around the world, including nuclear submarines, massive aircraft carriers, medieval castles, airplane graveyards and more.

You can follow his exploits on Instagram and YouTube, and on his travel blog, BaldNomad. He also wrote a bestselling sci-fi novel about city-size submarines, along with a sequel.